Algorithms that convert 2D images into "high-precision 3D images" accelerate the evolution of AI

The current wave of excitement surrounding artificial intelligence (AI) dates back to 2012. That year, an academic contest was held to see how accurately algorithms could recognize objects in photographs.

That year, researchers built an algorithm loosely inspired by how neurons in the human brain respond to new information. They discovered that recognition accuracy could be dramatically improved by feeding thousands of images into the algorithm. This groundbreaking discovery shook the worlds of academic research and business, and it is transforming numerous companies and industries.

And now, a new technique has emerged that trains the same type of AI algorithm to turn two-dimensional (2D) images into expressive 3D scenes. The technique, which has stirred up the worlds of both computer graphics (CG) and AI, has great potential to transform video games, virtual reality (VR), robotics, and autonomous driving.

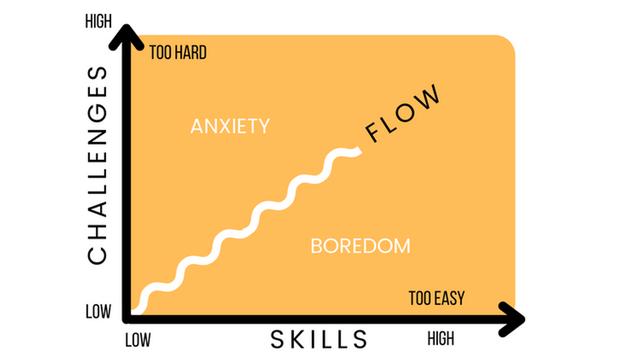

Some experts think that this technology may even help computers understand the world and reason about it in a smarter, more humanlike way.

Revolutionary technology with many uses

"It's a technology that's getting a lot of attention right now," says Ken Goldberg, a robotics researcher at the University of California, Berkeley (UCB). He is working on using this technology to improve the capabilities of AI-powered robots so that they can grasp objects with unfamiliar shapes. He says the technology should have "hundreds of uses," from entertainment to architecture.

The technique behind this new technology is called "neural rendering," which uses a neural network to take in several 2D snapshots and generate a 3D scene from them. Interest in the approach, which grew out of a fusion of concepts from CG and AI, surged in 2020. In April of that year, a joint research team from UCB and Google demonstrated that a scene could be reproduced as a strikingly realistic 3D image simply by showing the neural network a few 2D photos.

The algorithm exploits the way light travels through the air, calculating the density and color of each point in 3D space. This makes it possible to turn 2D images into a realistic 3D representation that can be viewed from any angle.
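To make the idea of "density and color at each point" more concrete, here is a minimal Python sketch of how such values might be blended along a single camera ray to produce one pixel. It is an illustration only, not the researchers' implementation: the function names, the sample count, and the hand-written `toy_field` (a red sphere standing in for a trained neural network) are all assumptions made for this example.

```python
import math

def composite_ray(densities, colors, step):
    """Blend per-sample density and RGB color along one ray into a single pixel."""
    transmittance = 1.0  # fraction of light that has not yet been absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb in zip(densities, colors):
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this short segment
        weight = transmittance * alpha         # contribution of this sample
        for c in range(3):
            pixel[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return pixel, transmittance

def toy_field(x, y, z):
    """Hypothetical stand-in for a trained network: a red sphere of radius 1."""
    inside = x * x + y * y + z * z <= 1.0
    return (5.0 if inside else 0.0), (1.0, 0.0, 0.0)

def render_pixel(origin, direction, near=0.0, far=4.0, n_samples=64):
    """Sample the field at evenly spaced points on the ray, then composite."""
    step = (far - near) / n_samples
    densities, colors = [], []
    for i in range(n_samples):
        t = near + (i + 0.5) * step  # midpoint of the i-th segment
        point = [o + t * d for o, d in zip(origin, direction)]
        sigma, rgb = toy_field(*point)
        densities.append(sigma)
        colors.append(rgb)
    return composite_ray(densities, colors, step)

# A ray through the sphere comes out almost fully red; a ray that misses stays black.
hit_pixel, hit_remaining = render_pixel((0.0, 0.0, -2.0), (0.0, 0.0, 1.0))
miss_pixel, miss_remaining = render_pixel((0.0, 3.0, -2.0), (0.0, 0.0, 1.0))
```

Repeating this for every pixel of a virtual camera gives an image from that camera's viewpoint, which is why a field of densities and colors can be viewed from any angle.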

The neural network at the core of this technique is similar to the image recognition algorithm presented at the 2012 academic contest, which analyzes the pixels of 2D images. The new algorithm transforms 2D pixels into their 3D counterparts, called "voxels." A video introducing the technique, dubbed Neural Radiance Fields (NeRF for short), has impressed many researchers.
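For readers unfamiliar with the term, a voxel is to a 3D volume what a pixel is to a 2D image: one cell in a regular grid. The short Python sketch below only illustrates that data structure. The `voxelize` helper, the grid resolution, and the `ball` scene are hypothetical names chosen for this example and are not taken from the NeRF work.

```python
def voxelize(field, resolution, lo=-1.0, hi=1.0):
    """Sample field(x, y, z) -> (density, rgb) at the center of every voxel."""
    size = (hi - lo) / resolution
    centers = [lo + (i + 0.5) * size for i in range(resolution)]
    # grid[i][j][k] holds the value at the voxel with x-index i, y-index j, z-index k
    return [[[field(x, y, z) for z in centers] for y in centers] for x in centers]

def ball(x, y, z):
    """Hypothetical scene: a solid gray ball of radius 0.5 at the origin."""
    inside = x * x + y * y + z * z <= 0.25
    return (1.0 if inside else 0.0), (0.5, 0.5, 0.5)

grid = voxelize(ball, 8)  # an 8 x 8 x 8 grid, i.e. 512 voxels

# Count the voxels whose center lies inside the ball.
occupied = sum(
    1 for plane in grid for row in plane for (density, _rgb) in row if density > 0
)
```

Where an image stores one color per pixel, each voxel here stores a density and a color, which matches the per-point quantities the algorithm is described as calculating.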

"I've been involved in computer vision research for 20 years, and when I watched this video, my reaction was, 'Wow, this is amazing,'" says Frank Dellaert, a professor at the Georgia Institute of Technology.

Anyone who studies computer graphics will recognize how innovative this technology is, Dellaert says. Creating a realistic, detailed 3D scene usually takes hours of tedious manual work. With the new method, such scenes can be generated from ordinary photographs in just a few minutes.
